As labour shortages continue to bite, food processors are backing AI-powered vision systems to automate the last remaining manual operations on packaging and production lines. For the first time, solutions that overlay 3D machine vision with AI are enabling tasks such as vegetable and fish processing to be performed by machine with a high level of accuracy and repeatability. A pioneer in applying 3D robot vision to complex problems, Scorpion Vision Ltd is at the forefront of this movement with its 3D+AI technology.

People often assume that machine vision is used in the food industry primarily for inspection. With the current labour crisis, however, the big opportunity for vision lies in automation. Scorpion’s 3D Neural Camera, deployed in tandem with robotics, makes it possible to automate labour-intensive cutting and processing operations whilst enhancing product appearance and reducing waste.

Paul Wilson, Managing Director at Scorpion Vision Ltd, says: “Since the labour crisis escalated several years ago, food businesses have been clamouring to automate manual operations. This started with the ‘low hanging fruit’, the easy-to-tackle and established applications, but now, as the situation becomes increasingly desperate, food processors are shifting their focus to those final ‘outposts’ of human intervention.”

He continues: “With the UK entering its main harvest season, the fresh produce industry in particular is feeling the pressure. We have worked closely with a number of vegetable growers and processors to deliver AI-optimised vision systems to replace human trimmers and see great potential for the broader application of this technology as well as further development in this area – this is only the tip of the iceberg in terms of what can be achieved by combining AI with 3D vision.”

On food lines, the main operations still performed by humans are the inspection and trimming of fresh produce, including fruit, veg, meat and seafood. Completing these tasks requires two eyes, a brain and a handheld knife. This is because no two products are identical, so there is no unique, guaranteed reference point of the kind that conventional vision systems normally rely on.

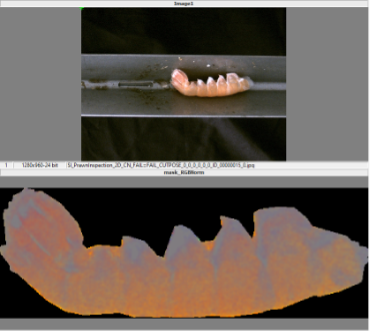

The key to overcoming this challenge is the application of AI, as vision systems can be taught to work with variability without compromising inspection performance or accuracy. By applying AI to machine vision in its 3D Neural Camera, Scorpion has achieved a very high level of repeatability, which is the key to high yield and minimal wastage. This solution has been tried and tested in a range of applications, from topping and tailing corn on the cob, swedes and leeks, to de-coring lettuce, removing the outer leaves from sprouts and de-shelling seafood.

The Scorpion 3D Neural Camera builds up a profile of a foodstuff in 3D and analyses it for reference features to create an approximation of what the vision system is looking for. AI is then applied to enhance feature extraction through ‘deep learning’.
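To give a feel for the first of these two steps, the sketch below shows one very simple way a height profile could yield approximate reference features for a topping and tailing task. It is an illustration only, not Scorpion's method: the function names, the belt threshold, the sample spacing, the trim margin and the profile values are all assumptions made for the example.

```python
from typing import List, Optional, Tuple


def find_extent(heights_mm: List[float],
                belt_threshold_mm: float = 5.0) -> Optional[Tuple[int, int]]:
    """Return the first and last sample indices that rise above the belt.

    heights_mm is a hypothetical 1D height profile taken along the length
    of the product; anything at or below the threshold counts as belt.
    """
    above = [i for i, h in enumerate(heights_mm) if h > belt_threshold_mm]
    if not above:
        return None  # nothing on the belt
    return above[0], above[-1]


def approximate_cuts(heights_mm: List[float],
                     sample_spacing_mm: float = 2.0,
                     trim_mm: float = 10.0,
                     belt_threshold_mm: float = 5.0) -> Optional[Tuple[float, float]]:
    """Estimate top and tail cut positions (in mm along the belt).

    The two ends of the product act as rough reference features and the
    cuts are placed a fixed, assumed margin inside each end.
    """
    extent = find_extent(heights_mm, belt_threshold_mm)
    if extent is None:
        return None
    first, last = extent
    start_mm = first * sample_spacing_mm
    end_mm = last * sample_spacing_mm
    if end_mm - start_mm <= 2 * trim_mm:
        return None  # product too short to trim both ends
    return start_mm + trim_mm, end_mm - trim_mm


# Made-up profile: belt, then a vegetable roughly 90 mm long, then belt again.
profile = [0, 0, 1, 12, 30, 38, 40, 41, 40, 39, 36, 28, 15, 2, 0]
cuts = approximate_cuts(profile, sample_spacing_mm=10.0, trim_mm=20.0)
print(cuts)
```

A fixed margin like this is exactly the kind of approximation that struggles when no two products are alike, which is where the learned step comes in.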

Paul explains what this means: “Neural networks are the machine equivalent of brain neuron networks. Just as neurons transmit signals and information to different parts of the brain, the neural network uses interconnected nodes to teach computers how to process images. This is what is termed ‘deep learning’. If you give an AI-optimised vision system an approximation of what you are looking for by showing it examples, the neural network makes the connections. Effectively it is an elegant method of pattern matching.”
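The idea of learning from examples can be shown with the smallest possible building block of a neural network: a single artificial neuron trained by gradient descent. This is a toy sketch, not the network used in any Scorpion product. The two input features, the training values and the "stalk end" labels are invented for illustration, and a real deep learning system would stack many such nodes in layers and work on image data.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """Score one example: weighted sum of inputs passed through the sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_neuron(examples: Sequence[Sequence[float]],
                 labels: Sequence[int],
                 epochs: int = 2000,
                 lr: float = 0.5) -> Tuple[List[float], float]:
    """Fit one neuron by nudging its weights after every example shown."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            error = predict(weights, bias, x) - y
            # Move each weight against the error, scaled by its input.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Hypothetical, hand-made features scaled 0 to 1 (e.g. relative height and
# relative width of a region); label 1 = "stalk end", 0 = "not stalk end".
examples = [(0.9, 0.8), (0.8, 0.9), (0.85, 0.7),
            (0.2, 0.3), (0.1, 0.2), (0.3, 0.1)]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_neuron(examples, labels)

# Regions the neuron has not seen before.
print(predict(weights, bias, (0.75, 0.85)) > 0.5)
print(predict(weights, bias, (0.15, 0.25)) > 0.5)
```

Nobody tells the neuron where the boundary between the two groups lies; it finds one from the examples alone, which is the pattern matching Paul describes.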

With 3D machine vision alone, reliability of around 80% is achievable in trimming and inspection applications; when it is overlaid with AI, that figure is close to 100%.

Employing AI in fish, seafood and vegetable processing has provided the missing link needed to achieve a high level of repeatability with subject matter that does not conform. Until very recently, picking up a vegetable and manipulating it with two hands whilst looking at it to decide what to do with it was the domain of the human. Now, it can be done with 3D+AI and a good robot.